Wearable computing and the remembrance agent

Barry Crabtree and Bradley Rhodes.

Baz@info.bt.co.uk, rhodes@media.mit.edu

Published in BT Technology Journal, 16(3), July 1998, pp. 118-124.

Abstract

This paper gives an overview of the field of wearable computing, covering the key differences between wearables and other portable computers and the issues involved in designing wearables and their applications. It then presents a specific example, the wearable Remembrance Agent, a proactive memory aid. The paper concludes with a discussion of future directions for research and applications inspired by using the prototype.

Introduction

I am a new field engineer issued with the latest support equipment: a wearable computer that clips to my belt, with a throat microphone, an earpiece and a head-up display that looks like a pair of normal glasses. My first job is a repair at a large building complex that is unfamiliar to me. As I approach the complex, my wearable whispers in my ear, reminding me that I have to report to a certain building first and giving directions from my current position. As I check in, my head-up display reminds me about a recent fault that was fixed by another engineer, so I ask whether the fix has been satisfactory. When I reach the equipment to be repaired, I find a virtual reminder left by the installation engineer, which I read on my display before starting. As I start work, notes about similar faults are brought to my attention, which makes it easier to track down the problem. In this case I know I will need to liaise at some point with a colleague who specialises in this area. There are several people I could talk to, and from the background ‘noise’ from the wearable I know who is available and when. When I have finished the repair, the wearable shows me that a number of faults occurred at about the same time of day, indicating some other, common cause that needs to be tracked down.

This scenario may sound some way off in practical terms. It is not: all the technologies needed to support it are available. The aim of this paper is to describe some of these technologies in more detail and to indicate their current status. The paper starts by describing features available in wearable computers that are not available in current laptops or Personal Digital Assistants (PDAs). It then describes a number of general application areas for wearables, current wearable technologies, and design needs. This is followed by a description of the Remembrance Agent (RA), a wearable memory aid that continually reminds the wearer of potentially relevant information based on the wearer's current physical and virtual context. Finally, the paper discusses extensions that are being added to the current prototype system.

What are wearable computers?

A wearable computer is not simply a computer that you wear; it is a host for an application or set of applications. Once powerful local computing and advanced sensors can be carried on the body, many potential applications open up. This was not the case a few years ago: the computing power required could only be achieved with a workstation-sized machine, which kept wearables in the realm of science fiction. Now they are practical propositions.

Loosely defined, a wearable computer is one that is always with you, is comfortable and easy to keep and use, and is as unobtrusive as clothing. However, this "smart clothing" definition is unsatisfactory when the details are considered. Most importantly, it does not convey how a wearable computer differs from a very small palm-top. A more specific definition is that wearable computers have many of the following characteristics:

This list, and indeed any general discussion of wearable computers, should be read as a set of guidelines rather than absolute rules. In particular, good wearable computer design depends greatly on the applications intended for the device.

Potential applications

With computer chips getting smaller and cheaper, the day will soon come when desk, lap and palm-top computers all disappear into a vest pocket, a wallet, a shoe, or anywhere a spare centimetre or two is available. So what kinds of applications can we expect when the bulk of the portable PC disappears into your clothing? As processing power increases and machines get smaller, applications will be limited only by the quality of the available sensors, input and output devices, and networking. A whole range of novel applications is likely. Consider the following:

Until recently, computers have only had access to a user's context within a computational task, not outside it. For example, a word processor has access to the words currently typed, and perhaps to files previously viewed. However, it has no way of knowing where its user is, whether she is alone or with someone, whether she is thinking, talking or reading, and so on. Wearable computers make it possible to bring new sensors into everyday life, so that this physical context information can be used by the wearable to provide proactive, context-dependent support.

Design needs for wearables

Taking a portable computer or PDA and re-engineering it as a wearable computer is often not appropriate: many of the design requirements for portables no longer apply in the wearable computing environment. This section analyses requirements covering input, output, environment sensing, networking and power.

User input devices

Traditional keyboards are not appropriate as input devices on the move: they rely on a steady surface and cannot be used effectively while walking. They are also too large to be hidden from view or kept unobtrusive, which is important in many social situations.

One keyboard replacement currently in use is the Twiddler, a one-handed chord keyboard and mouse that, once the chords are learnt, allows input at 50 or more words per minute. However, it has to be strapped to the hand, so it is not appropriate where an application needs hands-free operation. In many cases, though, it is an acceptable solution, as it can be used on the move, is not particularly intrusive, and is quite robust. Other keyboards that may be appropriate for wearables include the half-QWERTY one-handed keyboard (Matias 96), which exploits the symmetry of the left and right hands in typing, and the BAT chording keyboard. These can be belt-mounted, so they need not be held in the hand at all times.
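Chord entry works by treating the set of keys held down together as a single symbol. The sketch below illustrates that idea with a hypothetical four-key layout encoded as a bitmask; the key names and the chord table are invented for illustration and are not the Twiddler's or the BAT's actual layout.

```python
# Hypothetical finger keys, one bit each (not a real Twiddler layout).
INDEX, MIDDLE, RING, PINKY = 1, 2, 4, 8

# Hypothetical chord table: bitmask of keys held together -> character.
CHORDS = {
    INDEX: "e",
    MIDDLE: "t",
    RING: "a",
    PINKY: "o",
    INDEX | MIDDLE: "n",
    MIDDLE | RING: "s",
    RING | PINKY: "r",
    INDEX | PINKY: " ",
}


def decode_chord(keys_down):
    """OR together the keys held when the chord is released and look it up."""
    mask = 0
    for key in keys_down:
        mask |= key
    return CHORDS.get(mask)  # None for an unassigned chord


def decode_sequence(chords):
    """Decode a sequence of chords, skipping any that are unassigned."""
    out = []
    for keys in chords:
        ch = decode_chord(keys)
        if ch is not None:
            out.append(ch)
    return "".join(out)


print(decode_sequence([[MIDDLE], [INDEX], [MIDDLE, RING], [MIDDLE]]))  # prints: test
```

Because a chord is a set rather than a sequence, the order in which fingers land does not matter, which is part of what makes one-handed entry fast once the chords are learnt.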

Single-word or short-phrase speech recognition systems are mature enough to be accurate for command-driven sequences, or for dictation in a controlled environment. Provided it is acceptable for the wearable to be controlled by voice (that is, the wearer does not need to stay quiet, and does not need to control the system in the middle of a normal conversation), speech input is possible and allows completely hands-free operation.
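Command-driven speech input typically restricts the recogniser to a small vocabulary and ignores anything it is unsure of. A minimal sketch follows, assuming a hypothetical recogniser that returns a phrase together with a confidence score; the command phrases and the 0.8 threshold are illustrative assumptions.

```python
# Hypothetical command vocabulary: spoken phrase -> command name.
COMMANDS = {
    "next suggestion": "NEXT",
    "previous suggestion": "PREVIOUS",
    "show suggestion": "SHOW",
    "take note": "NOTE",
}

MIN_CONFIDENCE = 0.8  # assumed threshold; would need tuning per recogniser


def interpret(phrase, confidence):
    """Map a recognised phrase to a command name, or None to ignore it.

    Rejecting low-confidence and out-of-vocabulary results avoids acting
    on fragments of ordinary conversation picked up by the microphone.
    """
    if confidence < MIN_CONFIDENCE:
        return None
    normalised = " ".join(phrase.lower().split())  # case and spacing
    return COMMANDS.get(normalised)
```

For example, `interpret("Next  Suggestion", 0.93)` yields `"NEXT"`, while an unrelated remark or a low-confidence match yields `None` and is simply ignored.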

More specialised input devices may be developed for particular applications, ranging from robust menu-selection devices such as the input dial (Bass et al. 97) to touch pads, data gloves, gesture recognition systems (Starner, Weaver & Pentland 97), or a radio-controlled mouse.

Output devices

Figure 1. Thad Starner wearing the Microoptical head-up display. A 320x240 image is projected into the left eye.

Visual displays: to be viewed on the move, displays have to be mounted close to the eye and held firmly in place. Current practical solutions, although functional, leave much to be desired aesthetically. One example of a small head-mounted display is the Private Eye, as worn by Rhodes (Figure 2), which gives 720x280 monochrome resolution at very low power consumption. Most LCD head-up displays give a crisp image at resolutions from quarter VGA to VGA, in either color or 128-level greyscale. Virtually all head-mounted displays make it obvious that you have a display in front of you. However, there have been recent advances by Microoptical Inc in embedding the display into glasses, as shown in Figure 1, which should go a long way towards making head-mounted displays socially acceptable. Depending on the application, it may be entirely feasible to use displays that are not head-mounted, such as wrist-mounted displays or pocket displays like those of the various PDAs, in which case there is much more flexibility in positioning and more scope for making the display discreet.

Audio: because audio does not distract the user in the same way as a visual display, audio output is especially useful when the user is driving, involved in delicate operations, or visually impaired. D. Roy and colleagues (Roy et al.) give a thorough overview of audio as both an input and an output medium. Audio is also particularly useful for conveying peripheral information.

Tactile "displays" may play an important role in wearable computers. We are all familiar with pagers and mobile phones that can vibrate to draw attention to new messages. The same technique could serve as a simple direction "display", with a vibrating element at the appropriate point on the body indicating which way to go. More sophisticated tactile displays could "draw" images on the skin (see Tan & Pentland 97 for a review of these and other tactile displays).
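A tactile direction display of the kind described above reduces to choosing which vibrating element to activate. The sketch assumes a hypothetical belt of eight motors spaced evenly around the waist, with motor 0 at the front and indices increasing clockwise; the layout and function name are illustrative, not a description of any existing device.

```python
def motor_for_direction(heading_deg, target_bearing_deg, num_motors=8):
    """Return the index of the belt motor nearest the target direction.

    Motor 0 faces forward and indices increase clockwise, so the relative
    bearing (target bearing minus the wearer's heading) selects the motor.
    """
    relative = (target_bearing_deg - heading_deg) % 360.0
    sector = 360.0 / num_motors
    # Adding half a sector before dividing rounds to the nearest motor.
    return int((relative + sector / 2) // sector) % num_motors
```

A wearer facing north (heading 0) with a destination due east would feel motor 2 on the right-hand side; one facing east with the destination due north would feel motor 6 on the left.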

Environment sensors

One key benefit of wearable computers will be their ability to make use of the immediate environment. We have already briefly mentioned applications based on augmented reality and intelligence augmentation, but to be effective these need some way of sensing the environment and the user's position within it. Crude position location can be achieved outdoors with GPS systems, which give position to within a few metres (with differential signals) together with speed and direction. Indoors the problem becomes more difficult: buildings have to be fitted with some kind of location beacon that the wearable can pick up (Starner, Kirsh et al. 97). Alternatively, the wearer can update his or her position manually.
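Turning two GPS fixes into the kind of walking directions imagined in the introduction needs a distance and a compass bearing. A minimal sketch using the standard haversine formula follows; the spherical-Earth model is an assumption that is adequate at the scale of a building complex, and the function name is illustrative.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius in metres


def distance_and_bearing(lat1, lon1, lat2, lon2):
    """Great-circle distance (metres) and initial bearing (degrees from
    north) from point 1 to point 2, using the haversine formula on a
    spherical Earth. Inputs are latitudes and longitudes in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)

    a = (math.sin(dphi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2)
    distance = 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))

    y = math.sin(dlmb) * math.cos(phi2)
    x = (math.cos(phi1) * math.sin(phi2)
         - math.sin(phi1) * math.cos(phi2) * math.cos(dlmb))
    bearing = (math.degrees(math.atan2(y, x)) + 360.0) % 360.0
    return distance, bearing
```

The bearing could then drive a visual arrow, a spoken instruction, or a tactile belt; indoors, the same logic would apply to beacon positions rather than GPS fixes.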

Position is only one of the things a wearable might sense. Simple sensors for temperature, humidity, noise level, light level, movement and so on are also available. Combined with image and voice recognition systems, these can provide an excellent basis for modelling the user's environment and so supply cues for context-based applications.
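Raw sensor readings are noisy, so a context model usually smooths them and converts them into stable labels before using them as cues. The sketch shows one common approach, an exponential moving average followed by two-threshold (hysteresis) classification, applied to a noise-level sensor; the class name, the dB thresholds and the smoothing factor are all assumptions for illustration.

```python
class NoiseContext:
    """Turn a stream of raw noise-level readings (dB) into a stable
    'quiet'/'noisy' label, as one possible cue for a context model."""

    def __init__(self, alpha=0.2, enter_noisy=70.0, leave_noisy=60.0):
        self.alpha = alpha              # smoothing factor for the moving average
        self.enter_noisy = enter_noisy  # smoothed level above which we switch to noisy
        self.leave_noisy = leave_noisy  # smoothed level below which we switch back
        self.level = None
        self.label = "quiet"

    def update(self, reading_db):
        # An exponential moving average damps momentary spikes (a door slam).
        if self.level is None:
            self.level = reading_db
        else:
            self.level += self.alpha * (reading_db - self.level)
        # Two thresholds (hysteresis) stop the label flickering at a boundary.
        if self.label == "quiet" and self.level > self.enter_noisy:
            self.label = "noisy"
        elif self.label == "noisy" and self.level < self.leave_noisy:
            self.label = "quiet"
        return self.label
```

A single loud reading in a quiet room leaves the label at `"quiet"`, whereas sustained noise switches it, and the gap between the two thresholds keeps it from oscillating when the level hovers near one value.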

Networking

There are a number of existing technologies for general-purpose network connections. For roaming, cellular modems are popular in the US and give reasonable data rates (up to 19 kbit/s). In the UK and Europe, GSM gives 9.6 kbit/s, but GPRS (General Packet Radio Service) will shortly be available, giving between 70 and potentially 170 kbit/s. For indoor use, wireless LAN or DECT is appropriate. The Universal Mobile Telecommunications System (UMTS) may be the long-term approach, giving flexible bandwidth through its pico-, micro- and macro-cell architecture.
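The practical effect of these data rates is easiest to see as transfer times. The sketch below computes idealised times for a hypothetical 120 KB document at the nominal rates quoted above; it deliberately ignores protocol overhead, latency and retransmission, so real times would be longer.

```python
# Nominal peak rates quoted in the text, in bits per second.
LINK_RATES_BPS = {
    "US cellular modem": 19_000,
    "GSM data": 9_600,
    "GPRS (low estimate)": 70_000,
    "GPRS (high estimate)": 170_000,
}


def transfer_seconds(size_bytes, rate_bps):
    """Idealised transfer time: ignores protocol overhead, latency and retries."""
    return size_bytes * 8 / rate_bps


doc_bytes = 120_000  # a hypothetical 120 KB document
for link, rate in LINK_RATES_BPS.items():
    print(f"{link}: {transfer_seconds(doc_bytes, rate):.1f} s")
```

Under these assumptions the document takes 100 s over GSM but under 6 s at the upper GPRS estimate, which is the difference between fetching material on demand and having to hold it locally.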

The need for a network connection varies with the application, but networking is essential for compute-hungry or information-hungry applications that cannot run within the processing power and disk space available locally on the wearable.

Power

Power consumption is a significant limitation on wearable computing. More disk and more processing power consume more energy, as does network connectivity and, to a lesser extent, the sensors used on the wearable. Where wireless network connectivity is available, local storage and processing can be traded off against remote storage and processing. However, with relatively modest requirements (processing power, hard disk, manual input devices and a simple display), it is possible to build wearable systems from readily available technology that are relatively light and run for a good number of hours on high-quality lithium-ion batteries. For example, a Pentium P90 with 16 MB of memory and a 2.1 GB disk would consume about 14 W. Much better performance can be expected from dedicated hardware; for example, the ‘Itsy’ project specifies a pocket computer based on the ARM RISC processor. The table below compares power consumption for these processor architectures:

| Processor | Power (W) |
|---|---|
| Pentium (100-200 MHz) | 10-15 |
| Pentium with VRT (100-166 MHz) | 7 |
| 486/100 | 4-5 |
| 486/40 | 2-3 |
| 386 | 1-2 |
| StrongARM 110 (160 MHz) | 0.3 |
| StrongARM 110 (200 MHz) | 0.9 |
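The table's figures translate directly into battery life, since running time is stored energy divided by total power draw. The sketch below assumes a hypothetical 20 Wh lithium-ion pack and a hypothetical 1 W allowance for the display, disk and radio, and uses the midpoint of each range in the table; none of these assumed values come from the paper.

```python
# Processor power draw from the table, in watts (midpoints for ranges).
PROCESSOR_WATTS = {
    "Pentium (100-200 MHz)": 12.5,
    "Pentium with VRT": 7.0,
    "486/100": 4.5,
    "486/40": 2.5,
    "386": 1.5,
    "StrongARM 110 (160 MHz)": 0.3,
    "StrongARM 110 (200 MHz)": 0.9,
}


def runtime_hours(battery_wh, processor_w, other_w=0.0):
    """Hours of operation = stored energy / total power draw."""
    return battery_wh / (processor_w + other_w)


BATTERY_WH = 20.0   # hypothetical battery pack capacity
OTHER_LOAD_W = 1.0  # hypothetical allowance for display, disk and radio

for cpu, watts in PROCESSOR_WATTS.items():
    print(f"{cpu}: {runtime_hours(BATTERY_WH, watts, OTHER_LOAD_W):.1f} h")
```

Under these assumptions the same pack lasts about 1.5 hours with a Pentium-class processor but over 15 hours with the 160 MHz StrongARM, which is why the processor choice dominates wearable power budgets.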

We must think of the wearable in terms of the application first, and then build the appropriate interface technology around it. The choices of display, input device, networking, processing and power all become clearer when discussed in terms of particular applications. To make this view concrete, the next section looks at one application, the wearable Remembrance Agent.

The Wearable Remembrance Agent

Current computer-based memory aids have been developed to make life easier for the computer, not for the person using them. For example, the two most common methods for accessing computer data are filenames (forcing the user to recall the name of the file) and browsing (forcing the user to scan a list and recognise the name of the file). Both methods are easy to program but leave the user to do the brunt of the memory task. Hierarchical directories or structured data such as calendar programs help only if the data itself is highly structured, and break down whenever a file or a query does not fit the predesigned structure. Similarly, keyword searches only work if the user can think of a set of words that uniquely identifies what is being sought.

The Remembrance Agent, described in (Rhodes & Starner 96) and since re-engineered at BT to link with Microsoft Word, watches what the user is reading or writing in a text editor and actively suggests personal email, papers, or other text documents that may be relevant. This lets the user focus on the primary task (in this case writing a report) and delegate the search for supporting material to the Remembrance Agent. The system has been in daily use for over a year now, and its suggestions are often quite useful. For example, at BT we have indexed journal abstracts from the past several years, and use the RA to suggest follow-up papers relevant to the work users are currently describing.
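The suggestion step described here amounts to repeatedly treating the text near the cursor as a query against an indexed document collection. A minimal sketch using standard vector-space retrieval (TF-IDF weighting with cosine similarity), the model associated with the SMART system (Salton 71), follows; the class name, the 50-word window and the IDF smoothing are assumptions for illustration, not the RA's actual back-end.

```python
import math
import re
from collections import Counter


def tokenize(text):
    return re.findall(r"[a-z']+", text.lower())


class MiniRetriever:
    """Illustrative vector-space index: TF-IDF weights, cosine similarity."""

    def __init__(self, documents):
        self.docs = documents  # list of (title, text) pairs
        counts = [Counter(tokenize(text)) for _, text in documents]
        n = len(documents)
        df = Counter()
        for c in counts:
            df.update(c.keys())
        # Smoothed inverse document frequency: rarer words weigh more.
        self.idf = {w: math.log((1 + n) / (1 + d)) + 1 for w, d in df.items()}
        self.doc_vecs = [self._weigh(c) for c in counts]

    def _weigh(self, counts):
        """TF-IDF vector, normalised to unit length (words unseen in the index get 0)."""
        vec = {w: tf * self.idf.get(w, 0.0) for w, tf in counts.items()}
        norm = math.sqrt(sum(v * v for v in vec.values())) or 1.0
        return {w: v / norm for w, v in vec.items()}

    def suggest(self, context_text, k=3, window_words=50):
        """Rank documents against the last `window_words` words being written."""
        recent = tokenize(context_text)[-window_words:]
        query = self._weigh(Counter(recent))
        scored = []
        for (title, _), vec in zip(self.docs, self.doc_vecs):
            score = sum(q * vec.get(w, 0.0) for w, q in query.items())
            scored.append((score, title))
        scored.sort(reverse=True)
        return [(round(s, 2), t) for s, t in scored[:k] if s > 0]
```

Calling `suggest` every few seconds with the editor buffer gives the continuous, query-free behaviour described above: the user never formulates a query, the recent text is the query.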

Figure 2. ‘Hat-top’ display for the Remembrance Agent.

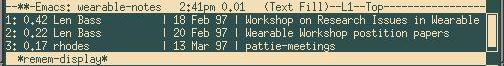

The Remembrance Agent was ported to a wearable computer over a year ago. The wearable is based on the ‘Lizzy’ design, which in this case consisted of a 486 processor running Linux, a ‘hat-top’ display, and a Twiddler as the input device. Figure 2 shows how the display is mounted, while Figure 3 shows a sample of results from the Remembrance Agent. Once the system was ported to a wearable computer, new applications became apparent even without modification. For example, when taking notes at a conference, the Remembrance Agent will often suggest a document that leads to questions for the speaker. Because the wearable is taken everywhere, the RA can also offer suggestions based on notes taken during coffee breaks, where laptop computers cannot normally be used. Another advantage is that, because the display is proactive, the wearer does not need to anticipate a suggestion in order to receive it. One common practice among wearable users at conferences is to type in the name of every person they meet while shaking hands. The RA will occasionally remind the wearer that the person whose name was entered has in fact been met before, and can even suggest the notes taken during that previous conversation. Better still would be a wearable that knows who is nearby without the wearer having to type anything, through sensors such as automatic face recognition (Moghaddam et al.) or active badge systems.

Figure 3. Example output from the Remembrance Agent. Each line shows a suggestion: first the degree of match, followed by the author, date and subject of the suggestion.
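The one-line suggestion format described in the Figure 3 caption can be sketched as fixed-width columns truncated to the display width. The column widths, the date format and the function name below are assumptions chosen to suit an 80-column screen, not the RA's actual layout.

```python
def summary_line(score, author, date, subject, width=80):
    """One suggestion per line: degree of match, then author, date and subject.

    Column widths are assumptions; the whole line is truncated so that each
    suggestion fits on a single row of the display.
    """
    line = f"{score:4.2f} {author[:16]:<16} {date:<10} {subject}"
    return line[:width]
```

Putting the score first lets the wearer judge at a glance whether a line is worth reading at all, and truncating the subject keeps one suggestion per row.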

Human memory does not operate in a space of query-response pairs. On the contrary, the context of a remembered episode provides many cues for later recall. These cues include the physical location of an event, who was there, what was happening at the same time, and what happened immediately before and after (Tulving 83). This information helps us both to recall events given a partial context and to associate our current environment with related past experiences. The Wearable Remembrance Agent has been augmented to use automatically detected physical context, when available, to help determine relevant information. This context information is used both to tag information and, later, for retrieval: notes taken on the wearable are tagged with context information and stored, and in suggestion mode the wearer's current physical context is used to find relevant information. If sensor data is not available (for example, if no active badge system is in use), the wearer can still type in context information manually. The current version of the Wearable Remembrance Agent uses five context cues to produce relevant suggestions:
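Tagging notes with context and later scoring them against the current context can be sketched as a weighted combination of per-cue similarities. The four cues below (location, people present, time, and words), the similarity measures, the 24-hour time scale and the weights are all hypothetical choices for illustration; the sketch only assumes, as the text describes, that cues may be missing and that the wearer can bias them by hand.

```python
import math
from dataclasses import dataclass
from typing import FrozenSet, Optional


@dataclass
class Context:
    """Hypothetical context record; a field stays None/empty when not sensed."""
    location: Optional[str] = None
    people: FrozenSet[str] = frozenset()
    time_h: Optional[float] = None       # hours since an arbitrary epoch
    words: FrozenSet[str] = frozenset()


def jaccard(a, b):
    """Overlap of two sets, from 0 (disjoint) to 1 (identical)."""
    return len(a & b) / len(a | b) if (a or b) else 0.0


def field_scores(query, note):
    """Similarity in [0, 1] for each cue present in the current context.

    Cues not sensed now are skipped; a note lacking a cue scores 0 on it.
    """
    scores = {}
    if query.location:
        scores["location"] = 1.0 if query.location == note.location else 0.0
    if query.people:
        scores["people"] = jaccard(query.people, note.people)
    if query.time_h is not None:
        scores["time"] = (0.0 if note.time_h is None
                          else math.exp(-abs(query.time_h - note.time_h) / 24.0))
    if query.words:
        scores["text"] = jaccard(query.words, note.words)
    return scores


def relevance(query, note, weights):
    """Weighted average of the cue scores, using user-set bias weights."""
    scores = field_scores(query, note)
    total_weight = sum(weights[f] for f in scores)
    if total_weight == 0:
        return 0.0
    return sum(weights[f] * s for f, s in scores.items()) / total_weight
```

Normalising by the weights of the cues actually sensed means a missing sensor neither inflates nor deflates every score, while a note that lacks a cue the wearer currently has is penalised on that cue.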

Usability

Sometimes a suggestion's summary line is enough to jog a memory, with no further lookup necessary (see Figure 3). Often, however, it is desirable to look up the complete reference being summarised. In these cases a single chord on the chording keyboard brings any of the suggested references up in the main buffer. If the suggested file is large, the RA automatically jumps to the most relevant point in the file before displaying it. The system has been in daily use on the wearable platform for over six months, and several design issues are already apparent from using this prototype. These issues will help drive the next set of revisions.
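One simple way to find "the most relevant point" in a large file, assumed here rather than taken from the RA, is to slide a fixed-size window over the document and keep the position whose window contains the most query words. The function name and the 40-word window are illustrative.

```python
import re


def best_offset(document, query_words, window=40):
    """Return the word index at which a `window`-word span of the document
    contains the most occurrences of the query words (earliest wins ties)."""
    words = re.findall(r"\w+", document.lower())
    targets = {w.lower() for w in query_words}
    hits = [1 if w in targets else 0 for w in words]
    if len(hits) <= window:
        return 0
    current = sum(hits[:window])
    best, best_start = current, 0
    for start in range(1, len(hits) - window + 1):
        # Slide one word: add the word entering the window, drop the one leaving.
        current += hits[start + window - 1] - hits[start - 1]
        if current > best:
            best, best_start = current, start
    return best_start
```

Updating the count incrementally keeps the scan linear in the length of the file, which matters on a 486-class wearable; the display would then scroll to the returned word position.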

The biggest design trade-off in the RA is between making continuous suggestions and only occasionally flashing suggestions in a more obtrusive way. The continuous display was designed to be as tolerant of false positives as possible and to distract the wearer from the real world as little as possible. It also allows the wearer to receive a new suggestion literally in the blink of an eye, rather than having to fumble with a keyboard or button (see figure to right). However, because suggestions are displayed even when none is especially relevant, the wearer tends to distrust the display. Our experience after a few weeks of use is that the wearer ignores the display except when already looking at the screen, or when already aware that a suggestion might be available. The next version of the RA will cull low-relevance hits from the display entirely, leaving a variable-length display of more trustworthy suggestions.

Furthermore, a "visual bell" that flashes the screen several times will accompany notifications judged too important to miss (for example, notification that a scheduled event is about to happen). This flashing is already used in a wearable communications system on the current head-mounted display, and has been satisfactory in getting the wearer's attention in most cases. Another lesson learned from the interface of this communications system is that the screen should change radically when an important message is available, so that the wearer need not read any text to see whether there is an important alert. Currently, the communications system prints a large reverse-video line across the lower half of the screen, which lets the wearer see at a glance whether a message has arrived.
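The two display policies discussed above, culling low-relevance suggestions and flashing a "visual bell" for items too important to miss, can be combined in a single planning step. The sketch assumes a hypothetical `Suggestion` record with an `urgent` flag, a relevance cut-off of 0.35 and at most five lines; all of these are illustrative values rather than the RA's settings.

```python
from dataclasses import dataclass


@dataclass
class Suggestion:
    score: float          # relevance in [0, 1]
    text: str
    urgent: bool = False  # e.g. a scheduled event that is about to start


CULL_BELOW = 0.35  # hypothetical relevance cut-off
MAX_LINES = 5      # hypothetical upper bound on suggestion lines


def plan_display(suggestions):
    """Return (lines to show, whether to flash the screen).

    Low-relevance hits are dropped entirely, so the list length varies.
    Urgent items are always kept, listed first, and trigger the visual bell.
    """
    kept = [s for s in suggestions if s.urgent or s.score >= CULL_BELOW]
    kept.sort(key=lambda s: (not s.urgent, -s.score))
    flash = any(s.urgent for s in kept)
    return [s.text for s in kept[:MAX_LINES]], flash
```

With culling, an empty or short display itself carries information: the wearer can trust that anything shown passed the threshold, which addresses the habit of ignoring a display that is always full.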

Another trade-off is between showing lots of text on the screen and showing only the most important text in larger fonts. The current design shows an entire 80-column by 25-row screen, but this often produces too much text for a wearer to scan while carrying on a conversation. Future versions will experiment with variable font sizes and animated typography (Small 94).

Habituation has been an issue with the current system. At present the user can set by hand how strongly the RA weights different features of physical context, but in future these biases should change automatically according to the user's context. For example, when a user first enters a room, the new location should be an important factor in choosing information to show. If the location has not changed after a few minutes, however, the RA should bias towards newer information.
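One possible way to automate this habituation, offered as an assumption rather than the RA's planned design, is to let the weight on the location cue decay with time since the wearer arrived, so that other, newer cues gradually dominate. The exponential half-life form and the default values below are hypothetical.

```python
def location_weight(seconds_since_arrival, base_weight=3.0, floor=0.5,
                    half_life_s=120.0):
    """Weight given to the location cue, decaying after the wearer arrives.

    Just after entering a room location dominates; as minutes pass without
    a change, its weight halves every `half_life_s` seconds towards `floor`,
    leaving other cues (such as newly typed text) to drive the suggestions.
    """
    decay = 0.5 ** (seconds_since_arrival / half_life_s)
    return floor + (base_weight - floor) * decay
```

Resetting `seconds_since_arrival` to zero whenever the location changes restores the full weight, so a move to a new room is immediately reflected in the suggestions.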

Related Work

Probably the closest system to this work is the Forget-me-not system developed at the Rank Xerox Research Centre (Lamming 94). Forget-me-not is a PDA system that records where its user is, who they are with, who they phone, and other such autobiographical information, and stores it in a database for later query. It differs from the RA in that the RA retrieves specific textual information (rather than just a diary of events), and the RA can make proactive suggestions as well as answer queries.

Several systems also exist to provide contextual cues for managing information on a traditional desktop system. For example, the Lifestreams project provides a complete file management system based on time-stamps (Freeman 96). It also provides the ability to tag future events, such as meeting times, that trigger alarms shortly before they occur. Finally, several systems recommend web pages based on the pages a user is currently browsing (Lieberman 95, Armstrong 95).

Conclusions

Building an effective wearable computing system requires careful consideration of the display technology and of the input and sensing devices. In this application we were in some ways lucky: the Lizzy was already available and had users who were happy to put up with less-than-ideal I/O, which made the system easier to design and allowed us to concentrate on the application side and how it could be improved. The wearable RA has certainly been useful to date, its most useful features being the ability to search a body of messages for appropriate text and to search on the person speaking.

It is not difficult to see that in more specialised mobile communities this context-based reminding will prove invaluable, and that the motivating scenario described in the introduction is technically feasible.

Acknowledgements

We would like to thank Jan Nelson, who coded most of the Remembrance Agent back-end, and Jerry Bowskill for reviewing an early draft of this paper.

References

R. Armstrong, D. Freitag, T. Joachims, and T. Mitchell, 1995. WebWatcher: A Learning Apprentice for the World Wide Web, in AAAI Spring Symposium on Information Gathering, Stanford, CA, March 1995.

L. Bass, C. Kasabach, R. Martin, D. Siewiorek, A. Smailagic, J. Stivoric, 1997. The design of a wearable computer. In Proceedings of CHI ’97 Conference on Human Factors in Computing Systems. S. Pemberton (Ed.). ACM: New York. pp. 139-146.

J. Bowskill, J. Morphett, and J. Downie, 'A Taxonomy for enhanced reality systems', Proceedings of the First International Symposium on Wearable Computing (ISWC'97), Boston, October 1997.

E. Freeman and D. Gelernter, March 1996. Lifestreams: A storage model for personal data. In ACM SIGMOD Bulletin.

M. Lamming and M. Flynn, 1994. Forget-me-not Intimate Computing in Support of Human Memory. In Proceedings of FRIEND21, '94 International Symposium on Next Generation Human Interface, Meguro Gajoen, Japan.

H. Lieberman, Letizia: An Agent That Assists Web Browsing, International Joint Conference on Artificial Intelligence, Montreal, August 1995.

T. Miah et al. Wearable computers: an application of BT’s mobile video system for the construction industry. In BT Technology Journal, Vol 16 No 1. Jan 1998.

E. Matias, I.S. MacKenzie, W. Buxton. One-Handed Touch-Typing on a QWERTY Keyboard. Human-Computer Interaction, Vol 11, pp 1-27, 1996.

B. Moghaddam, W. Wahid, A. Pentland. Beyond Eigenfaces: Probabilistic Matching for Face Recognition. Proceedings of the Third IEEE International Conference on Automatic Face and Gesture Recognition, Nara, Japan, April 14-16, 1998.

J.H. Page and A.P. Breen. The Laureate text-to-speech system – architecture and applications. p57- in the BT Technology Journal Vol 14, no 1. Jan 1996.

B. Rhodes and T. Starner, 1996. Remembrance Agent: A continuously running automated information retrieval system. In Proceedings of Practical Applications of Intelligent Agents and Multi-Agent Technology (PAAM '96), London, UK.

D. Roy, N. Sawhney, C. Schmandt, A. Pentland. Nomadic Computing: A survey of interaction techniques. http://nitin.www.media.mit.edu/people/nitin/NomadicRadio/AudioWearables.html

G. Salton, ed. 1971. The SMART Retrieval System - Experiments in Automatic Document Processing. Englewood Cliffs, NJ: Prentice-Hall, Inc.

D. Small, S. Ishizaki, and M. Cooper, 1994. Typographic space. In CHI '94 Companion.

T. Starner, J. Weaver, A. Pentland. A wearable computer based American Sign Language recogniser. In The First International Symposium on Wearable Computers, pp 130-137. 1997.

T. Starner, S. Mann, and B. Rhodes, 1995. The MIT wearable computing web page.

T. Starner, S. Mann, B. Rhodes, J. Healey, K. Russell, J. Levine, and A. Pentland, 1995. Wearable Computing and Augmented Reality, Technical Report, Media Lab Vision and Modeling Group RT-355, MIT

T. Starner, D. Kirsh, S. Assefa. The Locust Swarm: An Environmentally-powered, Networkless Location and Messaging System IEEE International Symposium on Wearable Computing, Oct. 1997.

Tan & Pentland (ISWC paper)

E. Tulving, 1983. Elements of episodic memory. Clarendon Press.


Bio for Barry Crabtree:

I joined BT in 1980 after completing a Physics degree at Bath University. After a number of years in software development I moved into the field of artificial intelligence (AI) and helped begin BT's systems in automated diagnosis and workforce management. I moved on to work in distributed AI, optimisation problems and adaptive systems.

I am currently a Technical Advisor in Applications, Research & Technology (ART), and have particular interest in Intelligent Agents and wearable computers.


Bio for Bradley J. Rhodes:

I'm in the Software Agents group at the Media Lab, and my particular focus is on designing "remembrance agents" that provide just-in-time information based on your current environment. The work tends to cross several disciplines, from Artificial Intelligence and Cognitive Science to Human Computer Interaction and design. Before my current research I worked on building autonomous characters for interactive fiction and automatic storytelling systems.